Introduction

As we approach the 2024 elections, a new threat looms large over the democratic process: AI-driven deepfakes. These highly realistic, digitally manipulated videos and audio files can make it appear as though individuals are saying or doing things they never did. This technology, driven by advances in artificial intelligence, poses a significant challenge to election integrity, potentially undermining trust in the electoral process and swaying public opinion based on fabricated evidence.

What are Deepfakes?

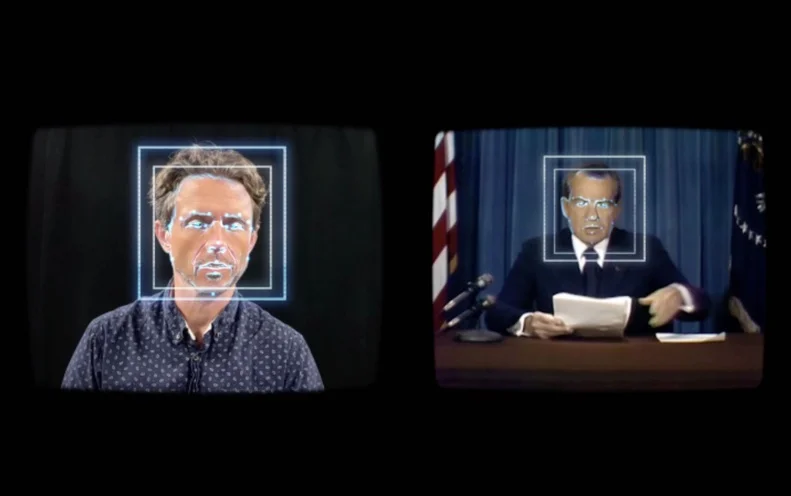

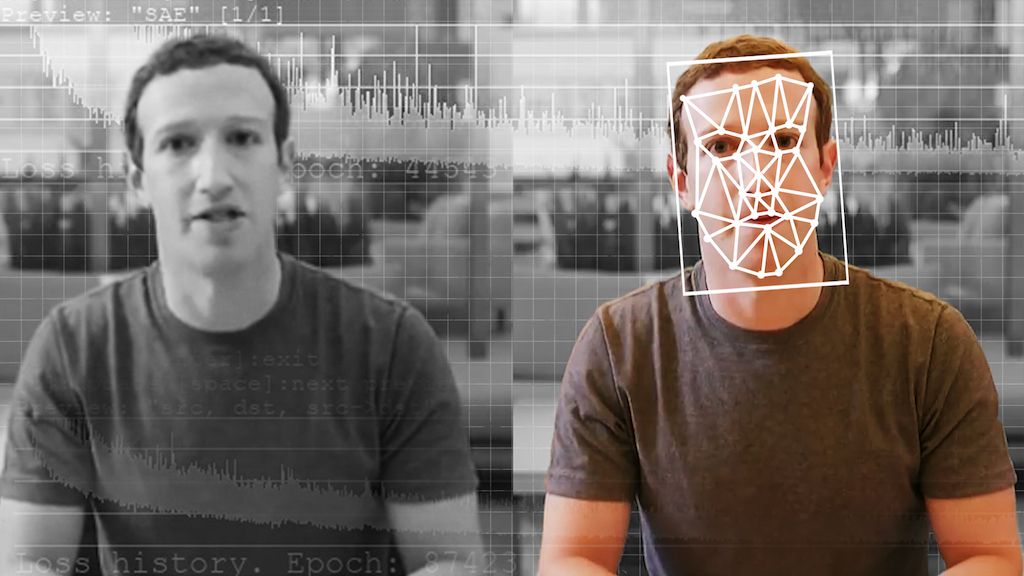

Deepfakes use artificial intelligence and machine learning techniques to create hyper-realistic digital forgeries. These can include videos, audio recordings, or images that are difficult to distinguish from genuine content. AI models trained on large amounts of a person’s recorded footage and speech can replicate that person’s likeness and voice with striking accuracy, making it possible to produce convincing fake content for misinformation and manipulation.

The Rise of AI-Driven Deepfakes

The rapid advancement of AI technologies has made the creation of deepfakes easier and more accessible. Tools and software for generating deepfakes are now widely available, allowing even individuals with limited technical expertise to produce convincing forgeries. This democratization of deepfake technology has led to an increase in their prevalence and potential for misuse, particularly in politically sensitive contexts such as elections.

Impact on Election Integrity

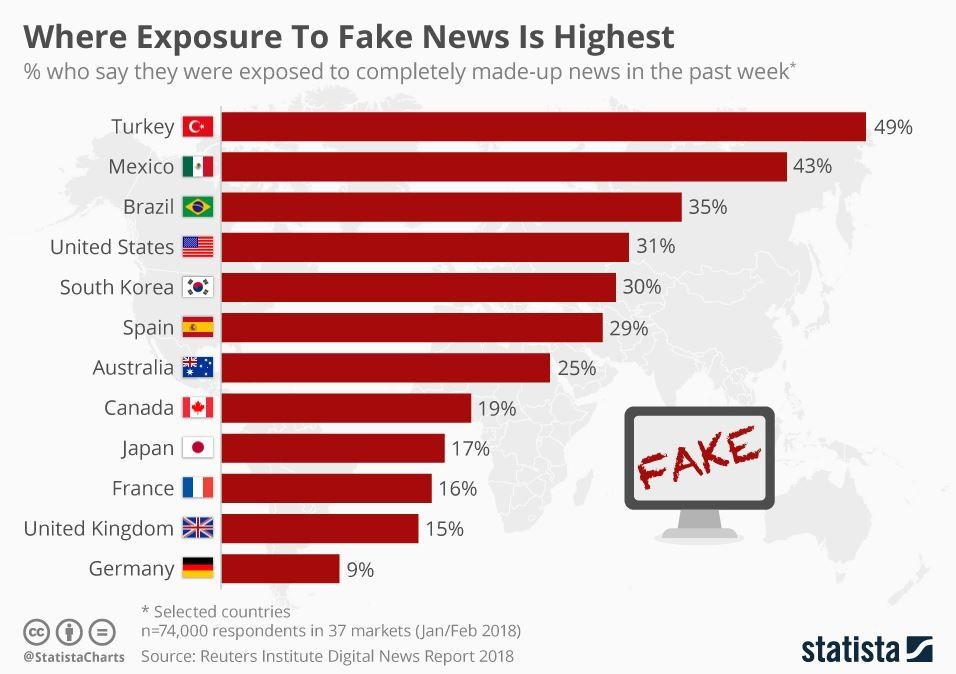

- Misinformation and Disinformation Campaigns: Deepfakes can be weaponized to spread false information about candidates, political parties, and election processes. These fake videos and audio clips can be used to discredit opponents, create confusion, and manipulate voter perceptions.

- Erosion of Trust: The very existence of deepfakes can erode trust in genuine content. As deepfakes become more convincing, voters may begin to doubt the authenticity of all digital media, leading to skepticism and cynicism about legitimate information.

- Voter Manipulation: Deepfakes can be strategically released to influence voter behavior. For example, a deepfake showing a candidate making controversial statements can be timed to go viral just before an election, swaying undecided voters and potentially altering the election outcome.

- Hindrance to Fact-Checking: The realistic nature of deepfakes makes it challenging for fact-checkers to debunk them quickly. By the time a deepfake is exposed, it may have already caused significant damage, spreading misinformation far and wide.

Case Studies and Examples

In recent years, several incidents have highlighted the potential impact of deepfakes on politics. For instance, during the 2020 elections, deepfake videos purportedly showing prominent politicians making inflammatory statements circulated on social media. While many were quickly debunked, their initial impact was considerable, showing how quickly and widely misinformation can spread.

Combating the Threat of Deepfakes

- Technological Solutions: Developing AI-driven tools that can detect and flag deepfakes is crucial. These tools analyze metadata, digital fingerprints, and inconsistencies within the media to identify forgeries.

- Regulatory Measures: Governments and regulatory bodies need to establish clear guidelines and penalties for the creation and distribution of deepfakes, particularly in the context of elections. Legislation can act as a deterrent and provide a framework for addressing deepfake-related offenses.

- Public Awareness and Education: Educating the public about the existence and potential dangers of deepfakes is essential. By raising awareness, voters can be more discerning about the media they consume and share.

- Collaboration with Tech Companies: Social media platforms and technology companies play a critical role in the fight against deepfakes. By implementing robust content moderation policies and detection algorithms, these platforms can help limit the spread of deepfake content.

- Support for Journalism and Fact-Checking: Strengthening independent journalism and fact-checking organizations can help counteract the spread of deepfakes. Providing resources and support for investigative journalism ensures that false information is swiftly debunked and accurate information is disseminated.
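
To make the metadata and digital-fingerprint checks mentioned under Technological Solutions more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a real detector: the function names, the metadata keys, and the idea of a registry of fingerprints published by a trusted source are all hypothetical. It only collects reasons a clip might deserve manual review.

```python
import hashlib

# Hypothetical metadata keys, chosen for illustration only.
# Real media files expose metadata through format-specific tools.
REQUIRED_METADATA = ("capture_device", "created_at")


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the raw media bytes."""
    return hashlib.sha256(data).hexdigest()


def review_flags(data: bytes, metadata: dict, trusted_fingerprints: set) -> list:
    """Collect reasons a clip may deserve manual review (not a verdict)."""
    flags = []
    # An exact hash match only confirms the bytes are identical to a known original.
    if fingerprint(data) not in trusted_fingerprints:
        flags.append("fingerprint not in trusted registry")
    # Missing metadata is a weak signal, since metadata can be stripped or forged.
    for key in REQUIRED_METADATA:
        if not metadata.get(key):
            flags.append(f"missing metadata: {key}")
    return flags


# Assumed setup: a trusted source has published the fingerprint of its original clip.
original = b"official campaign clip"
registry = {fingerprint(original)}

print(review_flags(original, {"capture_device": "cam-01", "created_at": "2024-01-01"}, registry))
print(review_flags(b"altered clip", {"capture_device": "cam-01"}, registry))
```

A cryptographic hash changes whenever a file is re-encoded or trimmed, so an unmatched fingerprint does not by itself indicate a forgery. Practical detection tools would likely pair checks like these with analysis of inconsistencies within the media itself.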

Conclusion

As we move towards the 2024 elections, the threat posed by AI-driven deepfakes should not be underestimated. These digital forgeries represent a new frontier in the battle for election integrity, capable of undermining trust, spreading misinformation, and manipulating voter behavior. Combating this threat requires a multi-faceted approach involving technological innovation, regulatory measures, public education, collaboration with tech companies, and support for independent journalism and fact-checking. By addressing the challenges posed by deepfakes, we can help safeguard the democratic process and ensure that elections remain fair and credible.